On Heavenbanning

The Heaven Ban: removing social media trolls by putting them in a parallel paradise where an elaborate network of chatbots validate them.

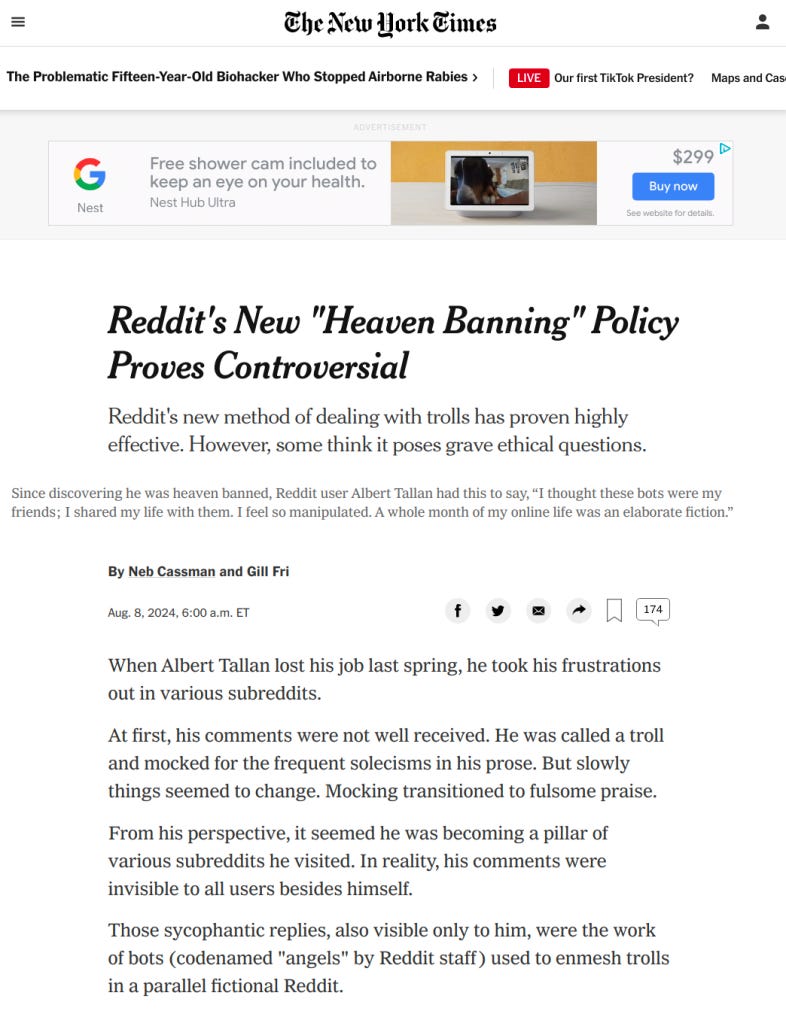

When Albert Tallan lost his job last spring, he took his frustrations out in various subreddits.

At first, his comments were not well received. He was called a troll and mocked for frequent solecisms in his prose. But slowly things seemed to change. Mocking transitioned to fulsome praise.

From his perspective, it seemed he was becoming a pillar of various subreddits he visited. In reality, his comments were invisible to all users but himself.

Those sycophantic replies, also visible only to him, were the work of bots (codenamed “angels” by Reddit staff) used to embed trolls in a parallel fictional Reddit.

— Asara Near

This is part of a series of essays on the modern metaverse. Today, we tackle a concerning concept I found on Twitter via artificial intelligence researcher Asara Near.

I haven’t heard the term “heavenban” much outside of the photoshopped New York Times article above, which I saw on Near’s Twitter. I find the concept so eerily tantalizing that I feel compelled to share it.

Here’s how Asara Near (@nearcyan) described heavenbanning:

heavenbanning, the hypothetical practice of banishing a user from a platform by causing everyone that they speak with to be replaced by AI models that constantly agree and praise them, but only from their own perspective

The concept may seem difficult to implement in practice (one might argue it requires bots that pass the Turing test), but I’m not here to talk about logistics. I’ll leave that to a more sinister, profit-driven mind. I will only note that GPT-3 and similar language models have brought chatbots that converse almost indistinguishably from real people within reach. Today, writing a language-generating bot that convinces someone their worldview is valid is quite close to doable.

Heavenbanning has complex moral implications. On one hand, separating people from human networks into a bot-filled metaverse could help prevent radicalization, or even induce deradicalization. One might imagine capturing redditors espousing neo-Nazi views and moving them into a space populated by robots that instead guide them back toward more acceptable views. There is indubitably potential here for preventing violence and echo-chamber-driven radicalization.

Unfortunately, this idea of using heavenbanning for good is a pipe dream.

Media companies don’t care about reducing radicalization (radicalization keeps users engaged!) or about maintaining socially acceptable views online. They care only about profits. And who is to say what is and is not an acceptable viewpoint, anyway? If we leave that decision to the media companies, heavenbanning gives them a neat excuse to populate feeds with algorithmically perfect content that shepherds users toward whatever viewpoints the companies see fit. Those viewpoints are certain to serve only the companies’ own ends.

The social order thrives when ideas are free to be broadcast and molded by discussion (an idea at least as old as Athens). Much of the power of modern social media lies in letting these conversations take place with more people than ever before. What happens when people are removed from the equation? New ideas could be efficiently stymied and cleansed, or entire flocks of people could be radicalized into overthrowing the government¹. Whatever makes the media machine more money, really.

The metaverse thrives on anonymity. Anonymity is often the appeal of platforms like Twitter and Reddit, but it also makes it harder to tell whether or not someone is a robot. The idea of stepping out of line and then suddenly interacting with a robot army masquerading as people is truly eerie to me. And it’s easy to imagine heavenbanning extending into the virtual realities of the future, too.

Heavenbanning cannot be added to our media platforms without rendering them inhospitable. I don’t argue against heavenbanning because I spend my time espousing socially repulsive views (far from it, I would say), but because I would be unable to tell when I am being shepherded along. Media companies will gladly rob us of our sovereignty and free will to line their pockets.

One could dismiss my view of this concept as simple paranoia. After all, the media has been a major force in both reflecting and shaping social views for as long as it has existed, so why is heavenbanning cause for alarm?

I think the key difference lies in the consolidation of media power and its growing influence. These companies have harnessed the speed and near-universal adoption of the internet to great effect. Media giants have already demonstrated the lengths to which they will go, damaging society for their own ends.

My list of grievances with media companies and platforms goes on and on. They already demonstrate a total lack of concern for user privacy and a willingness to police ideas that do not suit them. Media companies drive social and political polarization and design horrifically addictive platforms to maximize user time. And, it should go without saying, the government is incapable of regulating away any of these issues.

Heavenbanning would add to media companies’ arsenal of weapons of social devastation by letting them police users while maintaining engagement. Worse still, heavenbanning could lead to policies where users are fed pro-company propaganda and, since the public relies on the media for so much of its information, to a total deterioration of the truth. All this in the name of policing dangerous ideas.

Conservatives ought to fear media platforms inundated with robots algorithmically engineered to push users as far toward progressivism as possible. Liberals ought to fear chatbots that surgically instill social stagnation or regression. And we all ought to fear both at the same time. Since the adoption of modern media platforms is nearly universal, we will all feel the consequences.

The heavenban, as presented in Asara Near’s doctored image, is obviously disagreeable. The image was manufactured to provoke doubt and get readers thinking. Media companies will not sell the feature this way (assuming they announce its addition at all, rather than sliding it under the radar as part of a ‘troll detection feature patch’). No, media giants have the funds to sell any feature behind a sleek, sexy facade. We must see features for what they really are, with heavenbanning and with any other abomination these companies may produce. Media companies will also likely lean on the anti-free-speech talking points that have lately taken hold in parts of far-left discourse.

The onus remains on us as individuals to govern ourselves. Corporations will poison the public to line their pockets; we know this has been the case in the past and present, and we should not expect it to change in the future. Don’t buy snake oil. Touch grass.

Thanks to Christine Ji for helping me edit this piece.

¹ Maybe we don’t need chatbots for that.